The Swiss Cheese Problem: Why AI Agents Need Symbolic Backbone

AI agents show superhuman skill yet still fail in simple ways — a paradox known as the “Swiss cheese problem.” The solution lies in neuro-symbolic integration: combining neural networks’ creativity with the rigour of symbolic logic. Knowledge Graphs provide the missing backbone enterprises need for reliable, trustworthy AI.

Why Early Knowledge Graph Adopters Will Win the AI Race

Knowledge graphs are moving from niche to mainstream. Early adopters who embrace ontologies and semantic layers are already seeing measurable business impact. Here’s guidance for building your own successful knowledge graph.

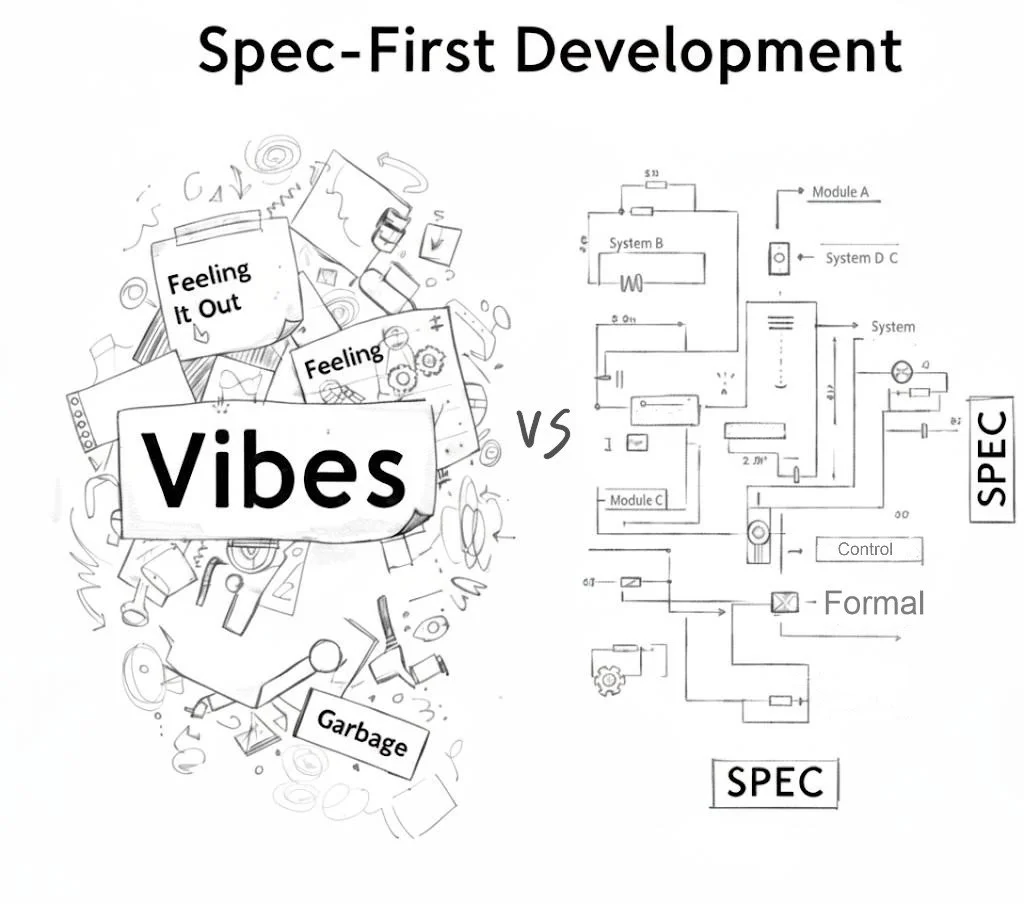

Spec-First Development: Why LLMs Thrive on Structure, Not Vibes

Vibe coding has its thrills, but LLMs shine when paired with formal specifications. Learn how test-driven practices, ontologies, and knowledge graphs turn ambiguity into executable, structured intent.

Context Rot: Why Bigger Context Windows Aren’t the Answer for Retrieval

Bigger context windows don’t automatically improve retrieval. Real gains come from reasoned, precise context—structured and guided by ontologies and knowledge graphs.

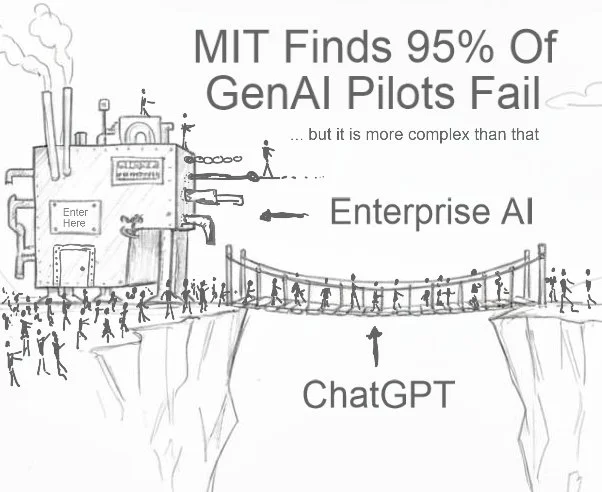

The GenAI Divide: Why 95% of Enterprise AI Pilots Fail

95% of enterprise GenAI pilots fail—but it’s not the models’ fault. The winners connect data, enforce clear semantics, and wrap LLMs in formal ontologies for trustworthy, validated AI.

Integration Isn’t Optional: Why AI-Ready Data Needs URIs and Ontologies

The Semantic Web isn’t the problem—distributed data integration is. For AI agents to act and reason effectively, organisations need clear semantics, stable URIs, and shared ontologies baked into their data products.

Walmart’s SuperAgents: Why Semantics and Knowledge Graphs Are the Real Foundation

Walmart’s SuperAgents highlight the surface magic of AI orchestration. But the real value lies beneath: shared semantics, aligned ontologies, and a long-term investment in knowledge graphs that let agents coordinate reliably.

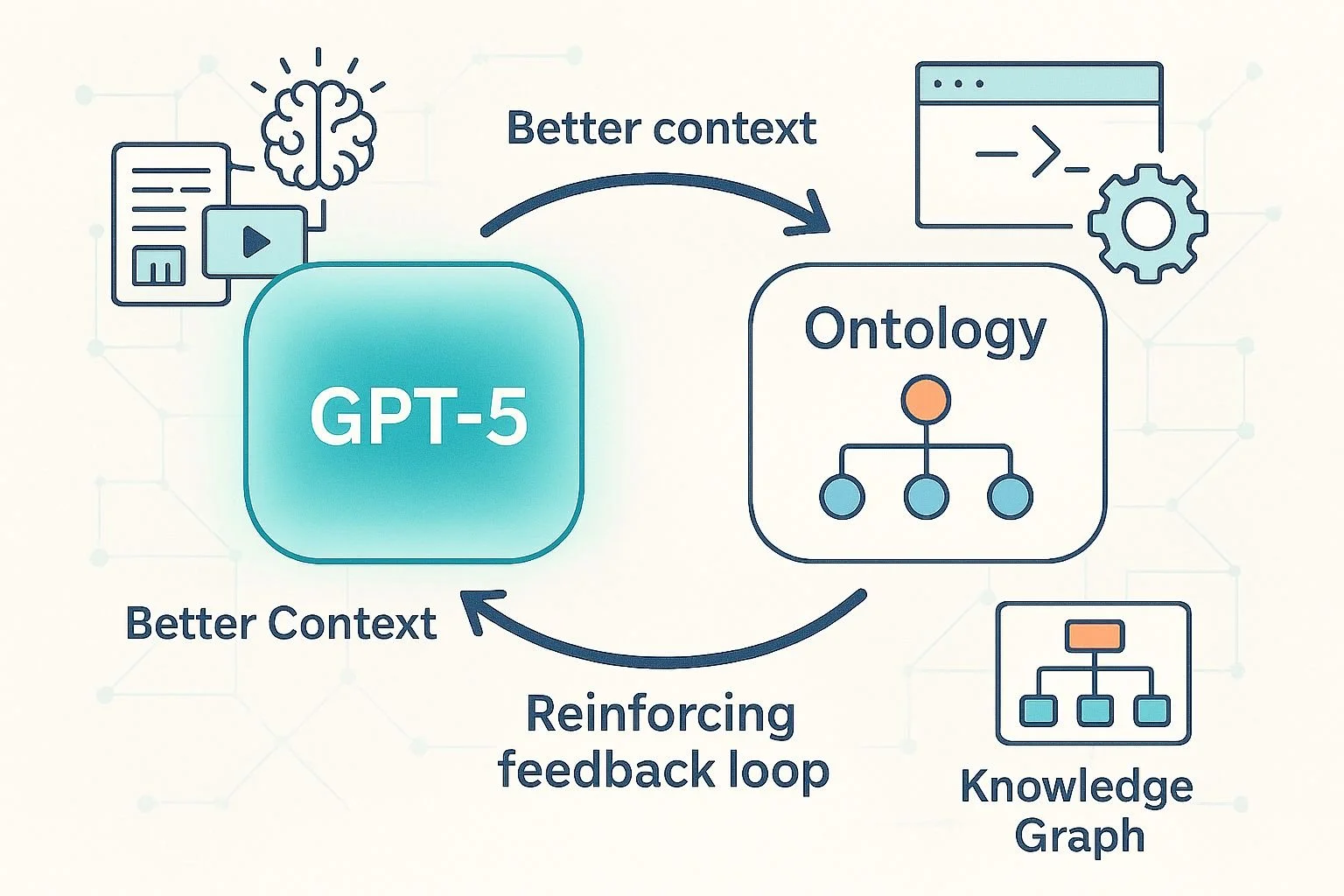

Revisiting the Neural-Symbolic Loop: GPT-5 and Ontologies in Tandem

With GPT-5, the synergy between LLMs and ontologies is clearer than ever. Larger context, multimodal input, and tool use let models help build ontologies — and ontologies, in turn, strengthen LLM reasoning, creating a self-reinforcing loop of improvement.

From Tables to Meaning: Building True Data Products with Ontologies

Semantics Is Meaning: The Hidden Structure That Makes You Unique

‘Semantics’ is often misused, yet it defines the very essence of meaning. Large Language Models hold a latent, globalised semantics — but not yours. True differentiation lies in owning your meaning through Knowledge Graphs and ontologies that reflect your reality, not the world’s average.

From Transduction to Abduction: Building Disciplined Reasoning in AI

Large language models excel at transduction — drawing analogies across cases — and hint at induction, learning patterns from data. But true reasoning demands abduction: generating structured explanations. By pairing LLMs with ontologies and symbolic logic, organisations can move beyond fuzzy resemblance toward grounded, conceptual intelligence.

Co-Creative Intelligence: When AI Agents Help Build Their Own World

AI agents that work with knowledge graphs aren’t just operating inside a static environment — they’re helping to build it. This co-creative relationship mirrors the dynamics of active inference, where intelligence emerges from continuous interaction between beliefs and reality. The real differentiator isn’t better algorithms, but better ontologies — clearer models of the world aligned with organisational strategy.

URLs for Data: The Key to Scalable Data Marketplaces

All functioning marketplaces rely on shared standards — and data marketplaces are no exception. The key lies in universal identifiers. Borrowing from the Semantic Web, the use of resolvable URLs for data items offers a simple, scalable way to unify fragmented data estates and enable decentralised coordination across the enterprise.

A Pause for Thought

After years of rapid breakthroughs, AI’s exponential curve seems to be catching its breath. But this isn’t a slowdown; it’s a strategic pause. With generative AI reaching a ‘good enough’ baseline, now is the moment to focus on structure, meaning, and human-guided scaffolding through knowledge graphs.

What is an Ontology?

Ontologies are the next step beyond schemas and knowledge graphs — flexible, logical frameworks that define meaning, enable reasoning, and power intelligent AI systems. Learn how they transform static data into structured knowledge.

ARC-AGI-2: The New Benchmark That Redefines AI Reasoning

Explore ARC-AGI-2, François Chollet’s groundbreaking benchmark for artificial general intelligence, and discover why symbolic reasoning and Knowledge Graphs are key to the next era of AI progress.

From Fractured Data to Connected Insights: Lessons from the Data Management Summit

Insights from the Data Management Summit London: why organisations must connect their data, embrace knowledge graphs, and define their ontological core to thrive in the AI era.

Transforming How Organisations See Data

Discover how large language models and knowledge graphs are transforming the way organisations use both structured and unstructured data. Learn how linking insights back to core business systems can unlock new capabilities and strategic advantages.

The Data Crunch

As AI accelerates through the economy, organisations with poorly integrated data systems will begin to show cracks. Disparate but entangled data quality issues will lead to unreliable AI insights and a loss of trust. Within ten years, many organisations may crumble under the strain of their fragmented infrastructures, losing relevance as their specific intelligence fades into the background intelligence of larger foundation models.